In my previous article, Why Should We Follow Method Overloading Rules, I discussed method overloading and the rules we need to follow to overload a method. I also discussed why we need to follow these rules and why some method overloading rules are mandatory while others are optional.

In a similar manner, in this article we will see which rules we need to follow to override a method and why we should follow them.

Method Overriding and its Rules

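As discussed in Everything About Method Overloading Vs Method Overriding, every child class inherits all the inheritable behaviour from its parent class, but the child class can also define new behaviours of its own or override some of the inherited behaviour.

Overriding means redefining, in the child class, a behaviour (method) that was already defined by its parent class. To do so, the overriding method in the child class must follow certain rules and guidelines.

With respect to the method it overrides, the overriding method must follow these rules:

- It must have the same name and the same argument list.
- It must have the same or a covariant return type.
- It must not have a more restrictive access modifier, but it may have a less restrictive one.
- It must not throw new or broader checked exceptions, but it may throw narrower checked exceptions or any unchecked exception.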

To understand the reasons behind these rules, let's consider the example below. Class Mammal defines a readAndGet method, which reads some file and returns an instance of class Mammal. Class Human extends class Mammal and overrides readAndGet to return an instance of Human instead of an instance of Mammal.

    import java.io.FileNotFoundException;
    import java.io.IOException;

    class Mammal {
        public Mammal readAndGet() throws IOException {
            return new Mammal(); // read the file and return a Mammal object
        }
    }

    class Human extends Mammal {
        @Override
        public Human readAndGet() throws FileNotFoundException {
            return new Human(); // read the file and return a Human object
        }
    }

We know that method overriding lets us make polymorphic calls: if we assign a child instance to a parent reference and call an overridden method on that reference, the method from the child class is the one that eventually gets called.

Let's do that:

    Mammal mammal = new Human();
    try {
        Mammal obj = mammal.readAndGet();
    } catch (IOException ex) {
        // handle the exception
    }
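As a quick check of the polymorphic call above, here is a minimal runnable sketch. The PolymorphicCallDemo class and its runtimeTypeOfResult helper are hypothetical names added for illustration, and the method bodies simply create objects instead of reading a file.

```java
import java.io.FileNotFoundException;
import java.io.IOException;

class Mammal {
    public Mammal readAndGet() throws IOException {
        return new Mammal(); // stands in for reading a file
    }
}

class Human extends Mammal {
    @Override
    public Human readAndGet() throws FileNotFoundException {
        return new Human(); // stands in for reading a file
    }
}

// Hypothetical demo class, not part of the original example.
class PolymorphicCallDemo {
    // Calls readAndGet() through a Mammal reference and reports
    // the runtime class of the object that came back.
    static String runtimeTypeOfResult(Mammal mammal) {
        try {
            Mammal obj = mammal.readAndGet();
            return obj.getClass().getSimpleName();
        } catch (IOException ex) {
            return "failed: " + ex.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(runtimeTypeOfResult(new Mammal())); // prints "Mammal"
        System.out.println(runtimeTypeOfResult(new Human()));  // prints "Human"
    }
}
```

Even though the parameter is declared as Mammal, the second call runs the method from Human, which is exactly the behaviour the rest of the article relies on.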

As discussed in How Does JVM Handle Method Overloading and Overriding Internally till compilation phase compiler thinks the method is getting called from the parent class. While bytecode generation phase compiler generates a

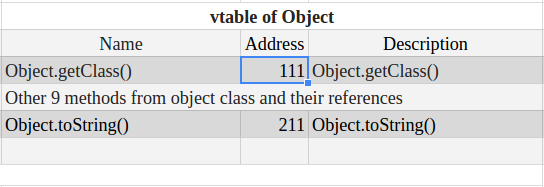

constant pool where it maps every method string literal and class reference to a memory referenceDuring runtime, JVM creates a

vtable or virtual table to identify which method is getting called exactly. JVM creates a vtable for every class and it is common for all the objects of that class. Mammal row in a vtable contains method name and memory reference of that method.First JVM creates a

vtable for the parent class and then copy that parent's vtable to child class's vtable and update just the memory reference for the overloaded method while keeping the same method name.You can read it more clearly on How Does JVM Handle Method Overloading and Overriding Internally if it seems hard.
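The copy-and-update idea can be mimicked with an ordinary map. The sketch below is only a toy model under loud assumptions: a real JVM does not store vtables as a Map of lambdas, and VtableSketch, mammalTable, humanTable and the extra breathe entry are all hypothetical names used for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

// Toy model only: method name -> implementation. Not the JVM's real data structure.
class VtableSketch {
    static Map<String, Supplier<String>> mammalTable() {
        Map<String, Supplier<String>> table = new LinkedHashMap<>();
        table.put("readAndGet", () -> "Mammal.readAndGet");
        table.put("breathe", () -> "Mammal.breathe"); // hypothetical inherited method
        return table;
    }

    static Map<String, Supplier<String>> humanTable() {
        // Start from a copy of the parent's table...
        Map<String, Supplier<String>> table = new LinkedHashMap<>(mammalTable());
        // ...and replace only the entry of the overridden method, keeping its name.
        table.put("readAndGet", () -> "Human.readAndGet");
        return table;
    }

    public static void main(String[] args) {
        Map<String, Supplier<String>> table = humanTable();
        System.out.println(table.get("readAndGet").get()); // prints "Human.readAndGet"
        System.out.println(table.get("breathe").get());    // prints "Mammal.breathe"
    }
}
```

The lookup key stays the same for parent and child, while the target behind that key changes for the child, which is why the overriding method has to keep the parent's name and argument list.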

So, as of now, we are clear that:

- For the compiler, mammal.readAndGet() means the method is being called on an instance of class Mammal.
- For the JVM, mammal.readAndGet() is being called from a memory address that the vtable holds for Mammal.readAndGet(), which points to the method from class Human.

Why overriding method must have same name and same argument list

Conceptually, mammal is pointing to an object of class Human and we are calling the readAndGet method on mammal, so for this call to be resolved at runtime, Human must also have a readAndGet method. If Human has simply inherited that method from Mammal, there is no problem. But if Human overrides readAndGet, it must provide the same method signature as Mammal, because the call has already been made according to that signature.

You may be asking how this is handled physically by vtables. The JVM creates a vtable for every class, and when it encounters an overriding method it keeps the same method name (Mammal.readAndGet()) and only updates the memory address for that method. So the overridden and the overriding method must have the same method name and argument list.

Why overriding method must have same or covariant return type

So we know that, for the compiler, the method is being called on class Mammal, and for the JVM the call goes to the instance of class Human. In both cases, the readAndGet call must return an object that can be assigned to obj. Since obj is of type Mammal, it can hold either an instance of Mammal or an instance of a child class of Mammal (children of Mammal are covariant to Mammal).

Now suppose the readAndGet method in class Human returned something else. At compile time mammal.readAndGet() would not create any problem, but at runtime it would cause a ClassCastException, because mammal.readAndGet() would be resolved to new Human().readAndGet() and that call would not return an object of type Mammal.

And this is why having a different return type is not allowed by the compiler in the first place.
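Here is a small sketch of the return type rule. It leaves out the exceptions to keep the focus on return types, and CovariantReturnDemo with its resultIsHuman helper is a hypothetical name added for illustration. The rejected variant is shown only as a comment, because it does not compile.

```java
class Mammal {
    public Mammal readAndGet() {
        return new Mammal();
    }
}

class Human extends Mammal {
    // Covariant return type: Human is a subtype of Mammal, so this is a valid override.
    @Override
    public Human readAndGet() {
        return new Human();
    }

    // Not allowed: an unrelated return type is rejected by the compiler.
    // @Override
    // public String readAndGet() { return "not a Mammal"; }
}

// Hypothetical demo class, not part of the original example.
class CovariantReturnDemo {
    static boolean resultIsHuman() {
        Mammal mammal = new Human();
        Mammal obj = mammal.readAndGet(); // the assignment is safe for any valid override
        return obj instanceof Human;
    }

    public static void main(String[] args) {
        System.out.println(resultIsHuman()); // prints "true"
    }
}
```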

Why overriding method must not have a more restrictive access modifier

The same logic applies here as well. The call to the readAndGet method is resolved at runtime, and as we can see, readAndGet is public in class Mammal. Now suppose:

- We define readAndGet as default or protected in Human, but Human is defined in another package.
- We define readAndGet as private in Human.

For the compiler, readAndGet is being called on class Mammal, but in both cases the JVM would not be able to access readAndGet from Human, because access to it would be restricted.

So, to avoid this uncertainty, assigning more restrictive access to the overriding method in the child class is not allowed at all.

Why overriding method may have less restrictive access modifier

If the readAndGet method is accessible from Mammal and we are able to execute mammal.readAndGet(), then the method is accessible to the caller. If we make readAndGet less restrictive in Human, it only becomes more open to being called.

So making the overriding method less restrictive cannot create any problem in the future, and that's why it is allowed.
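Both access modifier rules can be seen in one sketch. Since readAndGet is already public in the article's example and cannot be widened further, this sketch assumes a hypothetical protected method named describe; AccessModifierDemo is likewise a made-up name. The rejected variant is kept as a comment because it does not compile.

```java
class Mammal {
    // protected here, unlike the article's public readAndGet, so that widening can be shown
    protected String describe() {
        return "Mammal";
    }
}

class Human extends Mammal {
    // Allowed: public is less restrictive than protected.
    @Override
    public String describe() {
        return "Human";
    }

    // Not allowed: private (or package-private) is more restrictive than protected
    // and is rejected by the compiler.
    // @Override
    // private String describe() { return "Human"; }
}

// Hypothetical demo class, not part of the original example.
class AccessModifierDemo {
    static String describeViaParentReference() {
        Mammal mammal = new Human();
        return mammal.describe(); // same package, so protected access is fine here
    }

    public static void main(String[] args) {
        System.out.println(describeViaParentReference()); // prints "Human"
    }
}
```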

Why overriding method must not throw new or broader checked exceptions

Because IOException is a checked exception, the compiler forces us to catch it whenever we call readAndGet on mammal.

Now suppose readAndGet in Human throws some other checked exception, e.g. Exception. We know readAndGet will be called on the instance of Human, because mammal is holding new Human().

For the compiler, the method is being called on Mammal, so the compiler forces us to handle only IOException. But at runtime the method would throw Exception, which is not handled, and our code would break whenever the method throws it.

That's why this is prevented at the compiler level itself: we are not allowed to throw any new or broader checked exception, because it would not end up being handled by the calling code.
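To see what the compiler is protecting us from, the sketch below simulates a broader checked exception escaping from an overriding method. Such an override does not compile, so the sketch cheats with a generic "sneaky throw" helper, a well-known trick that relies on type erasure and is not something the original example uses. BroaderExceptionDemo, sneakyThrow and callThroughParentReference are hypothetical names, and the outer catch exists only so the demo can report what escaped.

```java
import java.io.IOException;

class Mammal {
    public Mammal readAndGet() throws IOException {
        return new Mammal();
    }
}

class Human extends Mammal {
    @Override
    public Human readAndGet() {
        // Simulates "throws Exception", which the compiler would reject on this override.
        BroaderExceptionDemo.<RuntimeException>sneakyThrow(new Exception("broader checked exception"));
        return new Human();
    }
}

// Hypothetical demo class, not part of the original example.
class BroaderExceptionDemo {
    // Rethrows any Throwable without declaring it; works only because of type erasure.
    @SuppressWarnings("unchecked")
    static <E extends Throwable> void sneakyThrow(Throwable t) throws E {
        throw (E) t;
    }

    static String callThroughParentReference() {
        Mammal mammal = new Human();
        try {
            try {
                mammal.readAndGet();
                return "no exception";
            } catch (IOException ex) {
                // The only handler the compiler asks us to write.
                return "handled by catch (IOException)";
            }
        } catch (Throwable t) {
            // Safety net for the demo: reports the exception that slipped past the handler.
            return "escaped: " + t.getClass().getName();
        }
    }

    public static void main(String[] args) {
        System.out.println(callThroughParentReference()); // prints "escaped: java.lang.Exception"
    }
}
```

The inner catch block is everything the compiler requires for a call on a Mammal reference, and it is not enough once the child throws something broader than IOException.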

Why overriding method may throw narrower checked exceptions or any unchecked exception

But if readAndGet in Human throws a sub-exception of IOException, e.g. FileNotFoundException, it will be handled, because catch (IOException ex) can handle every child of IOException.

And we know that unchecked exceptions (subclasses of RuntimeException) are called unchecked because we are not required to handle them.

And that's why overriding methods are allowed to throw narrower checked exceptions and any unchecked exception.
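The two allowed cases can be sketched as follows. The Whale class, the ExceptionRulesDemo class, its helpers and the file name in the message are hypothetical additions, and the methods throw unconditionally just to make the behaviour visible.

```java
import java.io.FileNotFoundException;
import java.io.IOException;

class Mammal {
    public Mammal readAndGet() throws IOException {
        return new Mammal();
    }
}

class Human extends Mammal {
    // Narrower checked exception: FileNotFoundException is a subclass of IOException.
    @Override
    public Human readAndGet() throws FileNotFoundException {
        throw new FileNotFoundException("human.txt is missing"); // hypothetical file name
    }
}

class Whale extends Mammal {
    // Unchecked exceptions can be thrown even though the parent never declares them.
    @Override
    public Whale readAndGet() {
        throw new IllegalStateException("not ready");
    }
}

// Hypothetical demo class, not part of the original example.
class ExceptionRulesDemo {
    static String call(Mammal mammal) {
        try {
            mammal.readAndGet();
            return "no exception";
        } catch (IOException ex) {
            // Also catches every subclass of IOException.
            return "caught " + ex.getClass().getSimpleName();
        }
    }

    static String callOnWhale() {
        try {
            return call(new Whale());
        } catch (IllegalStateException ex) {
            // Optional handler: the compiler does not require it.
            return "unchecked " + ex.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        System.out.println(call(new Human())); // prints "caught FileNotFoundException"
        System.out.println(callOnWhale());     // prints "unchecked IllegalStateException"
    }
}
```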

To force our code to adhere to the method overriding rules, we should always use the @Override annotation on our overriding methods. The @Override annotation forces the compiler to check whether the method is a valid override.

You can find the complete code on this Github Repository, and please feel free to provide your valuable feedback.